Technology | March 21, 2022

The evolution of Industrial Ethernet control system connectivity

Take a moment to reflect on where we’ve come from to better understand the Industrial Ethernet connectivity technologies that will help you achieve your goals. Beyond the point when we have connected everything to everything, which technologies will win the day? Thriving organizations are the ones that are paying attention to history.

Since the advent of electronic process control systems, many new technologies have made their way into the industry. Now, we are in the early years of another revolution that is bringing its own innovations and design philosophies. Taking a moment to reflect on where we’ve come from can help us better understand where we are going and which technologies will help us achieve our goals.

Early automation control and communication systems

When the first I/O systems were developed, the standard for control and sensing from the field relied on electromagnetic and pneumatic components, which were subject to physical degradation that limited their lifespan. In the 1960s, engineers generally organized relays into ladders of switching logic that directed electrical flows in a deterministic pattern. These relay configurations were inflexible and guaranteed to fail after a finite time, leading to the development of solid-state components that operated much more reliably.

Eventually, the same technology was applied to create compact signal sensing inputs, yielding the components needed for a complete digital input/output system. The first PLCs were built using these early I/O components and began making a splash in the automotive industry in the early 1970s.

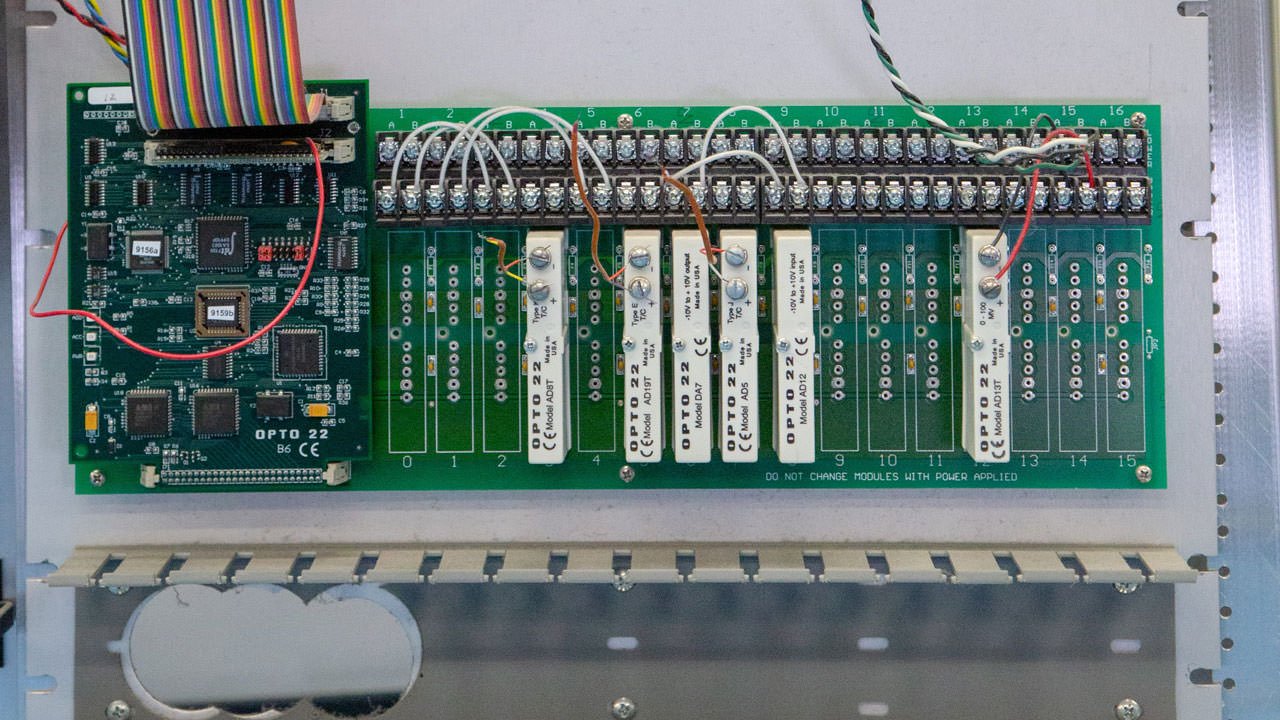

Around the same time, companies like DEC and Intel were bringing the microprocessor into the mainstream, and their users demanded options for integrating I/O into those systems as well. These high-tech computing systems required further developments in early I/O systems, which offered too little protection for sensitive computer electronics. The first generation of optically isolated digital I/O plug-in module racks quickly became the world standard form factor for computer-based control, allowing automated control to reach many more industries well into the ’80s.

Early PACs provided a wider feature set, including discrete and analog I/O, serial communication, and high-level programming languages. (pictured: Opto 22’s mistic platform, circa 1991)

These parallel bus systems were very fast for the time but suffered from a lack of noise immunity, leading to the first serially addressable I/O. The change yielded greater protection and extended cable lengths but came with a reduction in speed, which in some cases necessitated fundamental changes in the communication architecture. Processor-intensive I/O tasks like counting and latching were embedded in the I/O module to preserve the responsiveness of the system; core communication functions were located in a dedicated co-processor. Distributed control through so-called intelligent I/O allowed control systems to manage many more I/O points without impacting the performance of the central controller.
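To make the idea of intelligent I/O concrete, here is a minimal sketch of why counting and latching belong in the module rather than the central controller. The class and method names (`IntelligentInput`, `sample`, `read_and_clear`) are hypothetical and do not represent any vendor's firmware or API. The module samples the signal on its own fast local scan, so short pulses that arrive between two slow controller polls are still counted.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class IntelligentInput:
    """Hypothetical model of one digital input on an 'intelligent' I/O module."""
    state: bool = False      # last sampled signal level
    count: int = 0           # rising edges seen since the last clear
    on_latch: bool = False   # set when the input goes high; held until cleared

    def sample(self, level: bool) -> None:
        """Run by the module itself on every fast local scan."""
        if level and not self.state:   # rising edge detected
            self.count += 1
            self.on_latch = True
        self.state = level

    def read_and_clear(self) -> Tuple[int, bool]:
        """Run by the central controller over the slower serial link."""
        result = (self.count, self.on_latch)
        self.count = 0
        self.on_latch = False
        return result


channel = IntelligentInput()

# A burst of short pulses arrives between two controller polls.
for level in [False, True, False, True, False, True, False]:
    channel.sample(level)

# The controller polls once and still sees every pulse.
pulses, latched = channel.read_and_clear()
print(pulses, latched)  # 3 True
```

A controller that only polled the raw input level at this point would read `False` and miss all three pulses, which is the responsiveness problem that embedding these functions in the module avoids.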

As I/O modules and I/O processors improved, these early computer-based control solutions were able to offer analog signal processing options, something found only in large distributed control systems (DCSs) at that time. Since early ladder logic—used by PLCs as a programming language—wasn’t designed to handle analog data formats, this also led to the development of new programming languages.

These early developments exemplify a pattern that has repeated over the past several decades and yielded successively more compact and integrated devices. Later generations of control platforms offered greater computing power “per square inch” as advanced math, programming, and communication functions were incorporated into control boards. Newer generations also continued to combine and embed I/O processing circuitry in different ways. Individual modules expanded from a single I/O channel to upwards of 32 channels, and, in the case of universal I/O today, can even accept a variety of different signal types on a single physical channel.

These technologies have also become available in low-level devices such as I/O modules, sensors and transmitters, and networking components, allowing for the creation of flexible, resilient distributed architectures.

Early computer-based I/O systems allowed automated control to reach many more industries (pictured: Opto 22 B6 distributed I/O processor and first-generation optically isolated I/O modules, circa 1982)

The information revolution

Another pattern apparent from the early generations of control systems is the influence of information technologies on the industry. Even the PLC, marketed as an alternative to early general-purpose computers that were seen as unreliable and difficult to program, wouldn’t have existed without the development of computing technology. And in the 1980s and ’90s, as low-cost IBM-PC alternatives began to flood the market, innovations from outside the industrial control market continued to have an influence.

PCs were still the primary control option for many systems at the time, creating concerns about reliability. It made sense for vendors to develop an industrially hardened alternative, which ultimately crystallized the I/O, networking, and programming components of early hybrid solutions into a cohesive system that would later be called a PAC, or programmable automation controller. Since PACs used the same processors that powered PCs, they were able to offer a feature set that filled a niche between low-cost, PLC-based discrete control and high-dollar, DCS-based process automation.

The PC revolution also led to the popularity of the Microsoft Windows operating system (OS) and broad interest in using its graphical interface to provide a view into what was happening on the plant floor. However, with the expansion of the automation industry in the preceding decades, these early SCADA and HMI developers needed to create a suite of proprietary software drivers to communicate with every device the system might encounter. It was a time-consuming and expensive process that generally led to very limited driver functionality in the race to expand the available portfolio of supported devices.

In the PC world, a similar trend was occurring in the market for computer peripherals such as printers, and Microsoft had developed a solution that the automation world would later adopt. It began providing device drivers pre-installed into its operating system with a common software interface that developers could use to communicate through those drivers. Instead of building their own drivers to talk to peripherals directly, programmers could talk to Windows, and Windows would talk to the peripherals.

A similar idea, built on Microsoft’s later Object Linking and Embedding (OLE) technology, led Microsoft and a small group of automation vendors to develop OLE for Process Control, which was later renamed Open Platform Communications (OPC). OPC defined a common specification based on a client-server model that allowed Windows-based SCADA/HMI software to communicate indirectly with plant floor hardware by means of an OPC server, which housed all the drivers needed to communicate with those devices. This made it much easier for vendors to develop software for industrial systems and improved the quantity and diversity of data that could be extracted. OPC continues to be an influential interoperability standard today.
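The client-server arrangement described above can be sketched in a few lines. This is an illustration of the pattern only, not the OPC specification or any real OPC library; the names `TagServer`, `SerialPlcDriver`, `EthernetIoDriver`, and the `device.point` tag format are all assumptions made for the example. The point is that the client asks one server for named tags, while the device-specific drivers live behind that server.

```python
from typing import Dict, Protocol


class Driver(Protocol):
    """Anything that can read a named point from one kind of device."""
    def read(self, point: str) -> float: ...


class SerialPlcDriver:
    """Hypothetical driver; a real one would speak a vendor's serial protocol."""
    def __init__(self, registers: Dict[str, float]) -> None:
        self._registers = registers

    def read(self, point: str) -> float:
        return self._registers[point]


class EthernetIoDriver:
    """Second hypothetical driver that stores raw counts and scales them."""
    def __init__(self, raw_counts: Dict[str, int], scale: float) -> None:
        self._raw_counts = raw_counts
        self._scale = scale

    def read(self, point: str) -> float:
        return self._raw_counts[point] * self._scale


class TagServer:
    """Houses all drivers and exposes one uniform read() to clients."""
    def __init__(self) -> None:
        self._drivers: Dict[str, Driver] = {}

    def register(self, device: str, driver: Driver) -> None:
        self._drivers[device] = driver

    def read(self, tag: str) -> float:
        # Tags are written as "device.point" in this sketch.
        device, point = tag.split(".", 1)
        return self._drivers[device].read(point)


server = TagServer()
server.register("plc1", SerialPlcDriver({"tank_level": 42.5}))
server.register("rack2", EthernetIoDriver({"flow": 24}, scale=0.5))

# The HMI client knows only tag names, never the device protocols.
readings = {tag: server.read(tag) for tag in ("plc1.tank_level", "rack2.flow")}
print(readings)  # {'plc1.tank_level': 42.5, 'rack2.flow': 12.0}
```

Supporting a new device in this arrangement means writing one more driver and registering it with the server; the client code does not change.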

Approaching the tipping point

However, the development of OPC did not usher in a period of peace and cooperation among automation vendors, and information technology was again needed to provide a solution. During a period that came to be known as the Fieldbus Wars, vendors took the concept of serial bussed I/O and ran with it through various communication media and protocols, each attempting to establish their combination as the dominant standard.

At the same time, Ethernet was becoming popular in enterprise office environments and was proposed as a common standard for automation as well. Initially, Ethernet was met with skepticism by a majority of the industry. But with TCP/IP becoming the standard for the World Wide Web at the time, Ethernet became ubiquitous in computing hardware and was eventually accepted as a standard medium for industrial control and sensing.

Because it provided the opportunity for tighter integration with business networks, Ethernet spurred another evolution of the communication model in automation. TCP/IP became the standard for a whole new generation of I/O and device communication protocols, including a then little-known protocol called MQTT designed for lightweight machine-to-machine communication. By the time MQTT found popularity in the early 2010s, other networking advancements had been introduced to automation networks, including high-speed wireless media and smart wireless devices.

There was also greater interest in closing the gap between automation and business networks, which led to the introduction of industrial IoT gateways, and, more recently, edge-oriented controllers and I/O systems. These devices bridge the gap by combining traditional real-time control and sensing functions with communication, storage, security, and data processing functions previously found only in higher-level systems.

This combination of ever more powerful control and networking technologies has paved the way for modern concepts like the industrial internet of things (IIoT). And now, we are entering a so-called fourth industrial revolution that aims to harness highly connected and distributed automation to finally close the gap with business systems. Industry 4.0, as it is called, is shining a light on the value and significance of open-source software and long-neglected capabilities like cybersecurity for industrial systems, again drawing on innovations in IT to outfit control systems for the tasks we need them to perform.

Beyond the point when we have connected everything to everything, which technologies will win the day, and where will they take us? Who can say? But the trends up to this point seem clear, and the organizations that are thriving in the new age of industry are the ones that are paying attention to history.

Josh Eastburn, Director of Technical Marketing, Opto 22 Corporation