Technology | November 28, 2018

Converged network traffic using Time Sensitive Networking

In modern control architectures, Ethernet has become ubiquitous as a means of communicating between devices. In today’s Ethernet implementations, network hierarchy, non-standard solutions, segmentation and traffic-restricting techniques are often used to manage real-time traffic flows.

Time Sensitive Networking (TSN) is a set of released and emerging IEEE standards that promises network convergence, flattening hierarchical architectures while still meeting the requirements of the varied traffic flows. This article examines several real-time industrial traffic types, such as those used for motion control, remote I/O and events, and describes various TSN mechanisms that could be used to allow their operation in a converged network. It focuses on the interaction between the scheduling mechanism described in IEEE 802.1Qbv and Quality of Service (QoS).

Key traffic parameters, such as latency and jitter, will be calculated using the various TSN mechanisms for a particular traffic type, and the effects of converging several traffic types on the same network will also be considered.

Recent work by IEEE 802, the Internet Engineering Task Force (IETF), and other standards groups has extended the number of applications that Ethernet networks can serve. These standards define standard Ethernet mechanisms for creating distributed, synchronized, real-time systems.

TSN provides mechanisms to solve industrial control applications using standard Ethernet technologies in a manner that enables convergence between and among Operational Technology (OT) and Information Technology (IT) data on a common physical network.

This article will also explore several OT traffic types (motion, I/O and events) and the effects of converging them on a single wire. The techniques described can be extended to apply to convergence of IT and OT traffic.

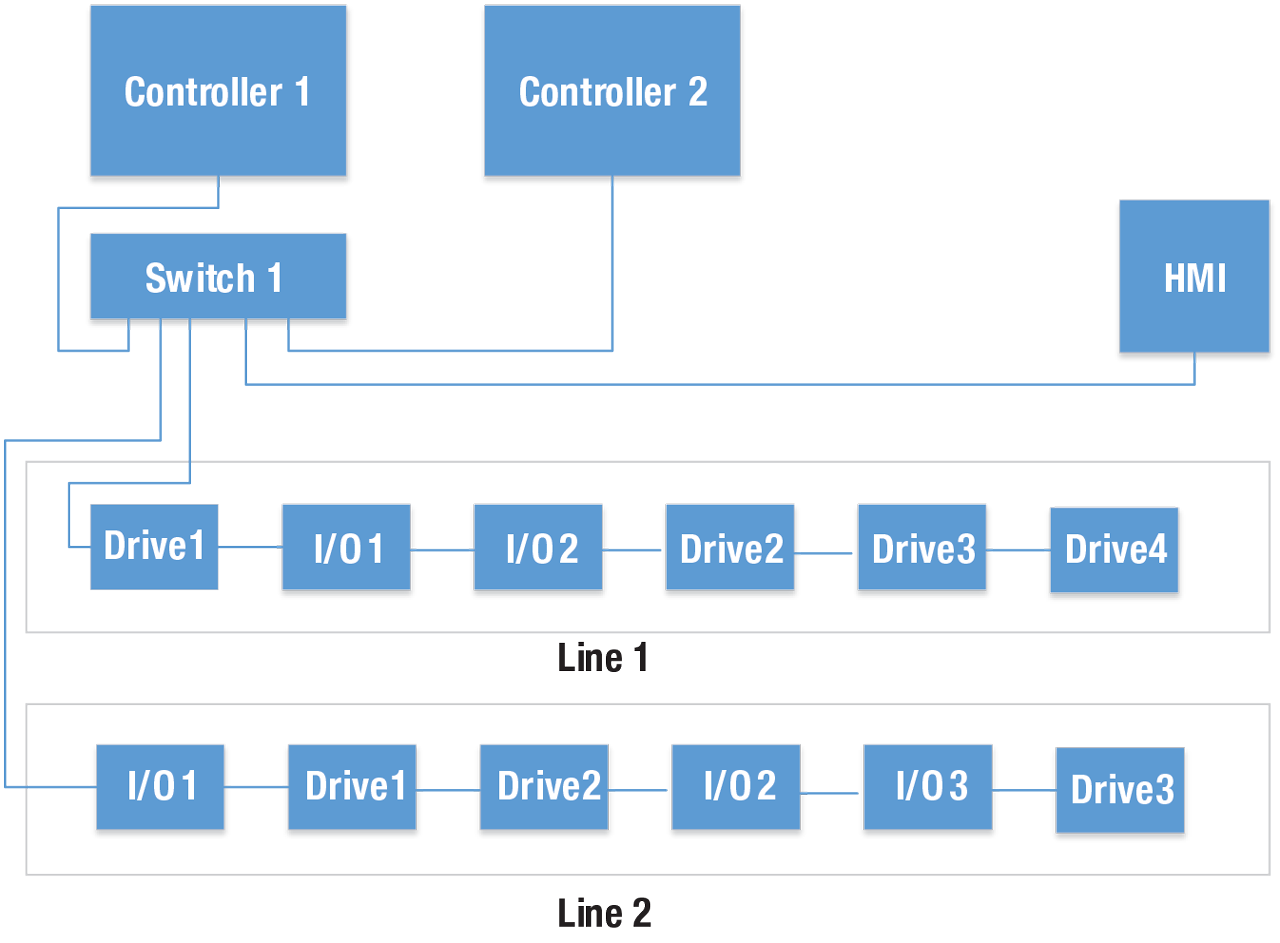

Converged Industrial Control System.

System overview

A simple industrial control system can be used to analyze converged network traffic flows. This example contains two separate processes that need to exchange information, such as one might find in conveying or baggage handling systems.

Network traffic between industrial controllers and devices such as drives and I/O devices typically follows specific patterns and has requirements on characteristics such as periodicity and latency. This article introduces three traffic types (patterns) that will be evaluated individually and then converged on a single wire.

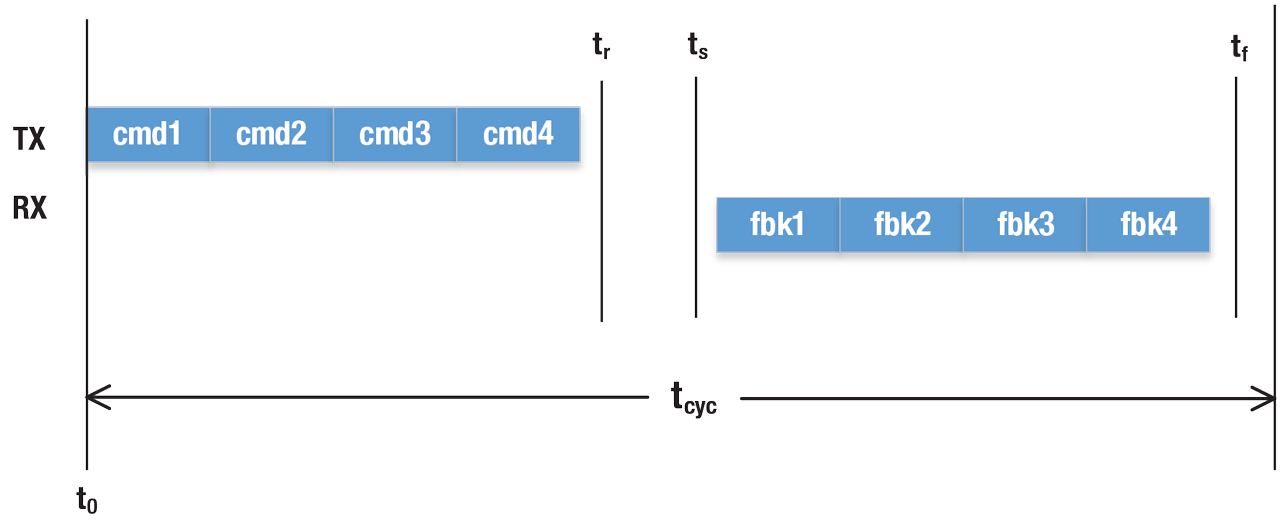

Isochronous Message Timing Diagram.

Isochronous (Motion)

Isochronous traffic is mainly found in motion control applications. This traffic pattern is cyclic but, unlike the non-isochronous cyclic traffic used for I/O, it has much more stringent message latency requirements, application time is synchronized to network time, and cycle times are typically shorter.

A controller, which could be controlling multiple axes, sends commands each cycle to the various drives under its control. In the same cycle, the drives return information (feedback) to the motion controller. The motion controller is responsible for computing the next command, while the drive is responsible for executing the commands and supplying feedback. This cycle is repeated, often at a very high rate (a cycle time of 1 ms or less). If the drives are expected to operate in a coordinated manner, each component of the system must share a common sense of time, which is strictly monotonic and steadily increasing, without jumps or leaps. This allows each drive to apply its commands and sample its feedback at the same instant. For TSN networks, this further requires that the applications in the motion controller and drives are also synchronized to network time.

For tight control loops, reception jitter must be minimal, with no interference from other traffic. Messages need a guaranteed delivery time. If they arrive later than this deadline, they are ignored for that cycle or discarded, thus potentially affecting the control loop. Message sizes are typically fixed at design time and remain constant for each cycle. Payload sizes are typically under 100 bytes per device. This type can be used for controller-to-controller, controller-to-drive and drive-to-drive communication.

Diagram of 1-Cycle Motion Timing.

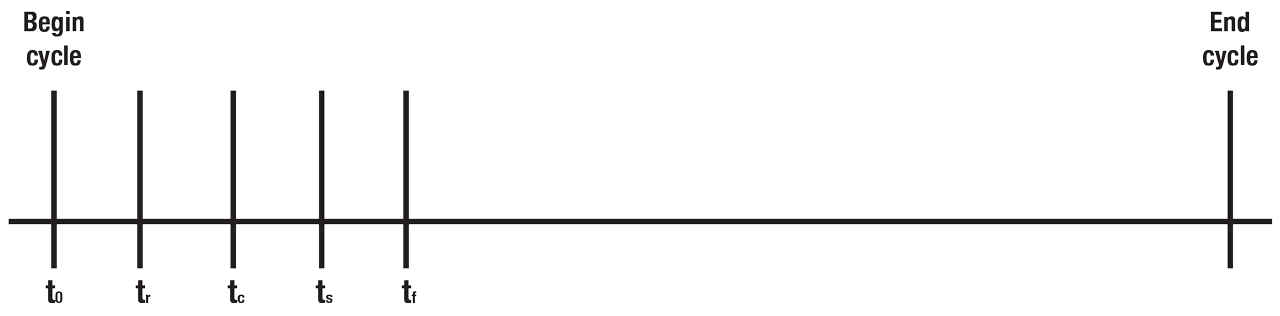

Typical 1-cycle timing model

The order in which critical timing elements occur may vary across implementations and timing models. Several models exist for synchronizing communication in a control system, and the order of the timing elements depends on the model used. What is critical is that there are periods of time in which controllers and devices are sending messages, and that these messages must be received before a certain time within each cycle. IEEE 802.1Qbv defines a scheduling mechanism that is well suited to this need. Schedules can be defined in network infrastructure devices such that only the desired traffic can flow during these periods, thus preventing interruption from ancillary traffic.

Cyclic Message Timing Diagram.

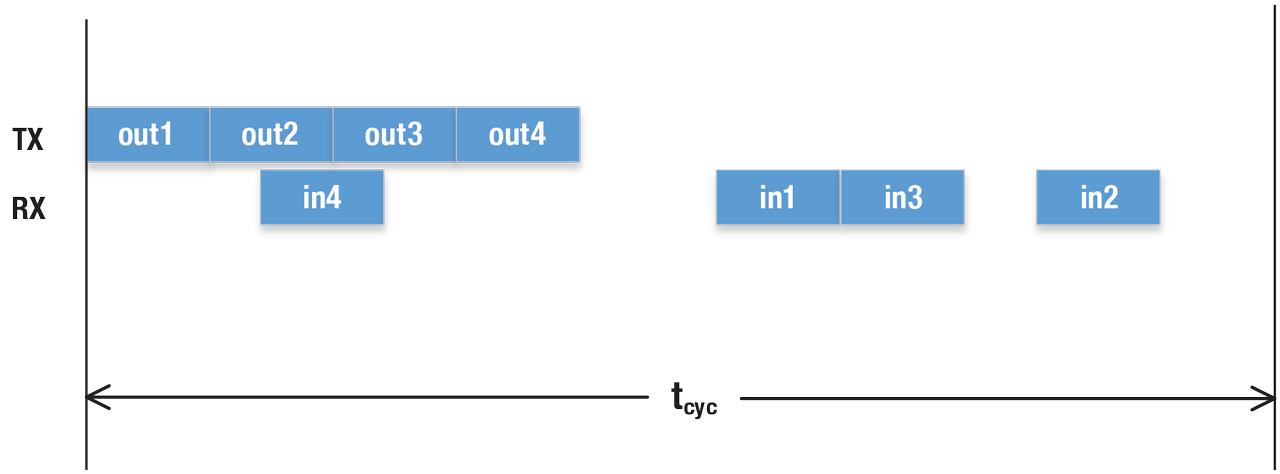

Cyclic (I/O)

Cyclic traffic that is not isochronous is typically used for remote I/O communications. The applications in devices are not synchronized to a common time. Devices sample inputs and apply outputs cyclically, which may or may not be the same as the data transmission cycle. Controllers typically send outputs to all devices one after the other. When using a client-server protocol (e.g. MODBUS), output and input messages will be clustered while in a publish/subscribe environment (e.g. EtherNet/IP I/O) output messages from the controller will be clustered while input messages from the devices will be distributed over the cycle time, since these devices are not synchronized to each other.

Here, we will only consider publish/subscribe cyclic traffic. For best control, the time between a device sending a message and its reception should be minimized, with predictable interruptions from other traffic. Messages require a defined maximum latency time. Data message sizes are fixed at design time and remain constant for each cycle. This traffic type can be used for controller to controller, controller to I/O, and I/O to I/O communication.

Alarm and control events

When an input or output variable changes (control event), or an occurrence needs to be announced (alarm event), event messages are generated. Depending upon the event, this might be a single message or a flurry of messages (domino effect). Control event messages may be directed to different end devices, whereas alarm event messages are typically directed at a single device, such as an HMI or SCADA system. The network must be able to handle a burst of messages without loss, up to a certain number of messages over a defined period.

For alarm messages, after this period, messages can be lost until the allowed bandwidth quantity has been restored. Applications are designed to compensate for message loss situations.
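The burst allowance described above can be pictured as a token bucket. This is only an illustrative sketch: the article does not name a policing algorithm, and the class name `BurstBudget` and its parameters are hypothetical. It allows up to `burst` messages at once and restores that allowance evenly over `period_s` seconds; messages arriving with no allowance left are treated as lost.

```python
class BurstBudget:
    """Token bucket sketch: up to `burst` messages per `period_s` seconds."""

    def __init__(self, burst, period_s):
        self.capacity = burst
        self.tokens = float(burst)      # start with a full allowance
        self.rate = burst / period_s    # tokens restored per second
        self.last = 0.0                 # time of the previous call

    def allow(self, now_s):
        """Return True if a message sent at now_s fits in the budget."""
        elapsed = now_s - self.last
        self.last = now_s
        # Restore allowance for the elapsed time, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False                    # budget exhausted: message is lost


budget = BurstBudget(burst=3, period_s=1.0)
results = [budget.allow(0.0) for _ in range(4)]   # fourth message exceeds the burst
```

With these assumed numbers, the first three messages at time 0 pass, the fourth is lost, and messages pass again once enough time has elapsed to restore the allowance.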

TSN overview

TSN enhances Ethernet (IEEE 802.1 and 802.3), and is foundational for the Internet of Things. TSN adds a range of functions and capabilities to Ethernet to make it more applicable to industrial applications that require more deterministic characteristics than possible in previous Ethernet implementations. Automation and control systems require devices, including the network, to perform in a deterministic way. One of TSN’s benefits is the ability to converge applications and traffic on a single, open network, while retaining deterministic behavior.

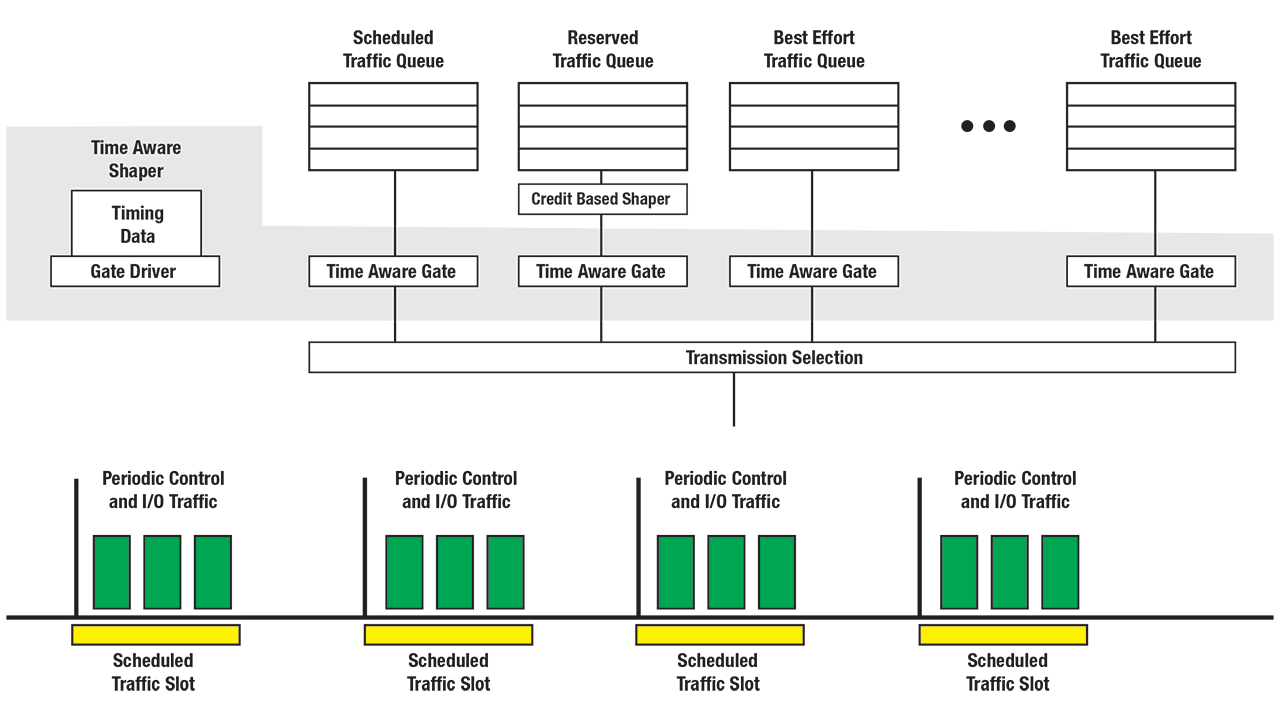

Time aware traffic shaping

Time Aware Traffic Shaping allows for scheduled traffic. To enforce the schedule throughout a network, interference from lower priority traffic has to be prevented, as this would increase not only the latency but also the delivery variation (jitter). The Time Aware Shaper blocks non-scheduled traffic (i.e., non-scheduled traffic is queued) so that the port is idle when the scheduled traffic is due for transmission.
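A minimal sketch of how such a cyclic gate schedule can be represented follows. The names `GateEntry`, `open_queues_at` and `SCHEDULE`, the 1 ms cycle, the 200 µs exclusive window and the use of queue 7 for scheduled traffic are all assumptions made for illustration. It does not model guard bands, which real implementations need so that a frame started just before a window does not overrun into it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateEntry:
    duration_ns: int         # how long this entry stays active
    open_queues: frozenset   # queues whose gates are open during the entry


def open_queues_at(schedule, t_ns):
    """Return the set of queues whose gates are open at time t_ns."""
    cycle_ns = sum(entry.duration_ns for entry in schedule)
    offset = t_ns % cycle_ns             # position inside the repeating cycle
    for entry in schedule:
        if offset < entry.duration_ns:
            return entry.open_queues
        offset -= entry.duration_ns
    raise AssertionError("unreachable: offset always falls inside the cycle")


# Hypothetical 1 ms cycle: 200 us reserved for queue 7 (scheduled traffic),
# then 800 us during which only the remaining queues may transmit.
SCHEDULE = [
    GateEntry(200_000, frozenset({7})),
    GateEntry(800_000, frozenset(range(7))),
]
```

For example, `open_queues_at(SCHEDULE, 150_000)` returns only queue 7, while any time between 200 µs and 1 ms into a cycle returns queues 0 to 6.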

QoS-strict priority

Strict priority is the default queuing mechanism for Ethernet bridges (switches). In strict priority queuing, the queue with the highest number has priority over the remaining queues. When multiple Ethernet frames are queued on an interface for transmission, the highest priority queue that has a frame ready for transmission transmits first. Frames in lower priority queues are held until theirs is the highest priority queue with a frame ready.

Shaped queues may have a numerically higher traffic class, but the transmission selection algorithm bound to each queue determines whether it is served before a lower priority queue. For example, a queue bound to the credit-based transmission selection algorithm could have a numerically higher traffic class but would not be served if it has insufficient credits, that is, if it does not have a frame ready for transmission.
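The strict priority rule can be sketched in a few lines. The function name `select_next_frame` and the dictionary-of-deques layout are hypothetical, and the sketch covers plain strict priority only: a shaped queue would need an additional check of whether it is currently eligible to transmit.

```python
from collections import deque


def select_next_frame(queues):
    """Strict priority: serve the highest-numbered queue with a ready frame.

    `queues` maps traffic class number -> deque of frames.
    Returns (traffic_class, frame), or None if every queue is empty.
    """
    for traffic_class in sorted(queues, reverse=True):
        if queues[traffic_class]:
            return traffic_class, queues[traffic_class].popleft()
    return None


example = {0: deque(["bulk"]), 3: deque(["io-1", "io-2"]), 6: deque()}
first = select_next_frame(example)   # queue 6 is empty, so queue 3 is served
```

The lowest priority frame is only selected once every higher queue has drained.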

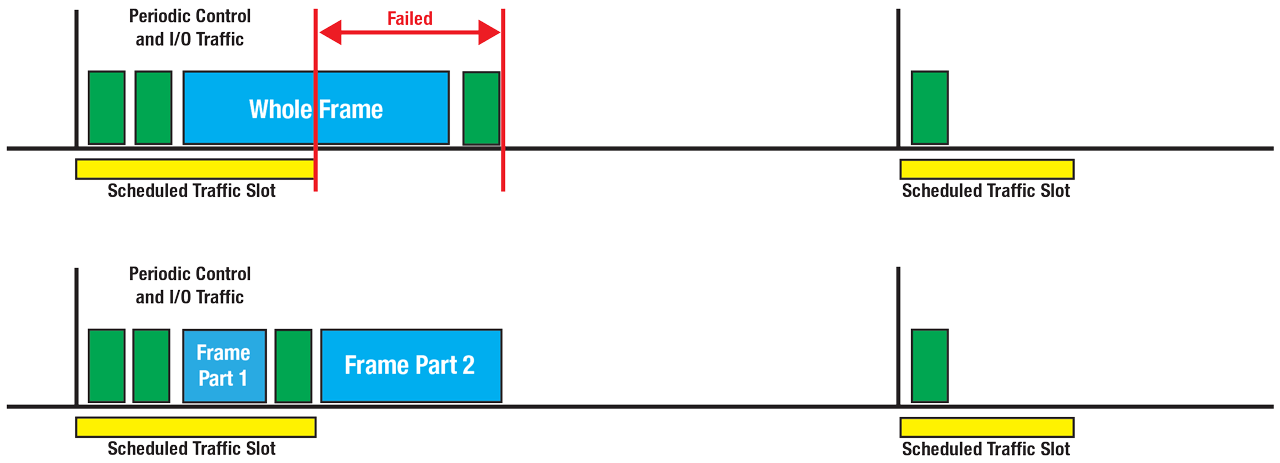

Frame Preemption and Interspersing Express Traffic.

Frame Preemption/Express Traffic

The Frame Preemption amendment specifies procedures, managed objects, and protocol extensions that:

- define a class of service for time-critical frames that requests the transmitter in a bridged LAN to suspend the transmission of a non-time-critical frame and allows time-critical frames to be transmitted. When the time-critical frames have been transmitted, transmission of the preempted frame resumes. A non-time-critical frame could be preempted multiple times;

- provide for discovery, configuration, and control of the preemption service for a bridge port and end station;

- ensure preemption is only enabled on a given link if both link partners have that capability.

The purpose of this amendment is to provide reduced latency transmission for scheduled, time-critical frames in a bridged LAN. A large, non-time-critical frame may start transmission just ahead of the desired transmission time of a time-critical frame. This condition leads to excessive latency for the time-critical frame. Transmission preemption interrupts the non-time-critical frame to allow the time-critical frames to be transmitted. This provides capabilities for applications that use scheduled frame transmission to implement a real-time control network.

Cut-through switching

Cut-through switching is where a switch starts forwarding a message (packet) before the whole message has been received. Compared to store-and-forward switching, cut-through switching offers lower latency but, because the frame check sequence appears at the end of a message, the switch is not able to verify message integrity before forwarding it. Cut-through switching will forward corrupted packets, whereas a store-and-forward switch will drop them.

Pure cut-through switching is only possible when the speed of the outgoing interface is equal to, or lower than, the incoming interface speed. A switch may buffer (acting in a store-and-forward manner) a packet instead of using cut-through under certain conditions:

- Speed: When the outgoing port is faster than the incoming port, the switch must buffer the entire message received from the lower-speed port before it can start transmitting that message out the high-speed port, to prevent underrun. (When the outgoing port is slower than the incoming port, the switch can perform cut-through switching and start transmitting that message before it is entirely received, although it must still buffer some of the message.)

- Congestion: When a cut-through switch decides that a message from one incoming port needs to go out through an outgoing port, but that outgoing port is already busy sending a message from a second incoming port, the switch must buffer some or all of the message from the first incoming port.
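The two conditions can be summarized as a small decision function. This is a simplified sketch with hypothetical names (`forwarding_mode` and its return strings); actual cut-through behavior is vendor-specific.

```python
def forwarding_mode(in_speed_bps, out_speed_bps, out_port_busy):
    """Decide how a switch forwards a frame under the two conditions above."""
    if out_speed_bps > in_speed_bps:
        # A faster egress port would run out of data mid-frame (underrun).
        return "store-and-forward"
    if out_port_busy:
        # Egress port is occupied: some or all of the frame must be buffered.
        return "buffer"
    return "cut-through"


mode = forwarding_mode(1_000_000_000, 100_000_000, out_port_busy=False)
```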

Traffic analysis

Industrial control systems require predictability of certain communications for optimal control. In today’s architectures, this is accomplished using methods such as proprietary and IEC-standardized fieldbus technologies, network segmentation and isolation, and over-provisioning. With TSN’s deterministic shaping mechanisms, these segmented traffic types can be converged in a single network while still meeting traffic delivery requirements.

There are several factors that affect the time it takes for a message to exit one device and be received by another, such as network data rate, message length, whether cut-through or store-and-forward switching is used, interrupting traffic and the number of switches in the path between source and destination.

Before one can begin to understand the effects of converged traffic on a particular traffic type, each traffic type must be analyzed in isolation without the presence of any potential disturbances. This will establish a baseline upon which comparisons can be made. The time it takes for a message to be delivered to its intended destination depends upon several factors:

- message length;

- Ethernet data rate;

- number of switches the message must pass through;

- whether the switches use cut-through or store-and-forward switching.
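The four factors above combine into a simple baseline estimate. The sketch below is a rough model under stated assumptions rather than the article's own calculation: propagation delay is ignored, switch processing delay is a caller-supplied parameter defaulting to zero, and a cut-through switch is assumed to start forwarding after receiving a hypothetical 14-byte header (the real value is vendor-specific). All function and constant names are illustrative.

```python
PREAMBLE_SFD_BYTES = 8   # preamble plus start-of-frame delimiter


def tx_time_ns(frame_bytes, rate_bps):
    """Time to serialize one frame, including preamble, onto the wire."""
    bits = (frame_bytes + PREAMBLE_SFD_BYTES) * 8
    return bits * 1_000_000_000 // rate_bps


def store_and_forward_latency_ns(frame_bytes, rate_bps, switches, switch_delay_ns=0):
    """The frame is fully serialized by the sender and again by every switch."""
    hops = switches + 1
    return hops * tx_time_ns(frame_bytes, rate_bps) + switches * switch_delay_ns


def cut_through_latency_ns(frame_bytes, rate_bps, switches,
                           header_bytes=14, switch_delay_ns=0):
    """Each switch waits only for the (assumed) header before forwarding."""
    header_ns = tx_time_ns(header_bytes, rate_bps)
    return tx_time_ns(frame_bytes, rate_bps) + switches * (header_ns + switch_delay_ns)


# Example: a 128-byte frame crossing 3 switches at 100 Mbit/s.
saf = store_and_forward_latency_ns(128, 100_000_000, switches=3)
ct = cut_through_latency_ns(128, 100_000_000, switches=3)
```

Under these assumptions the store-and-forward path takes 43.52 µs and the cut-through path 16.16 µs, which shows why the number of switches matters far more for store-and-forward switching.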

Interference

This section will consider the effects of messages that interfere with another message as it travels to its intended destination. Only store-and-forward switching scenarios are considered.

In today’s industrial control environment, devices with two external switched ports (enabling easy daisy-chaining of devices) are quite common. These devices are constructed using a three-port switch: two ports are exposed to outside connections, while the third is typically connected to the Central Processing Unit (CPU) of the device.

Interfering traffic can occur when a switch is already transmitting a message out a particular port and another message, destined for the same port, arrives. This newer message will have to wait for the completion of the already in progress message before it can be sent. This form of interference can happen regardless of message priorities.

Another form of interference is if a higher priority message is already queued in a switch when a lower priority message arrives (is queued). The switch will choose to send the higher priority message first, thus delaying the lower priority message.

These examples demonstrate how a message travelling through a switch can get delayed due to interference. In order to determine maximum message latencies, the time when messages arrive (ingress) and message priorities must be taken into account.

In-progress interference

In-progress interference occurs when a message destined for a particular port arrives after the switch has already decided to send another message out that same port. Message priority has no effect in this case, since the decision was made prior to the arrival of a potentially higher priority message. Assume a high priority message (HP0) is arriving on port 1 (P1) destined for port 2 (P2), and a lower priority interfering message (int0) is arriving from port 3 (P3), has already been queued, or is currently being transmitted out of P2.

The worst-case scenario occurs when the interfering message is queued just prior to the switch deciding which message to send next and the high priority message is queued just after the switch makes this decision. In this case, the lower priority message will be sent prior to the high priority message.

This will cause a delay of the high priority message equivalent to the amount of time remaining to send a message already in progress, which could be the time for the entire message in the case of starting transmission just before the arrival (queuing) of the high-priority message. If the interfering message is shorter than the high-priority message, this initial delay is the only delay the message will experience as it travels through more switches to its destination.
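As a rough worked example of this worst case, assume (the article does not give these figures) that the interfering frame is a maximum-size VLAN-tagged Ethernet frame of 1522 bytes, preceded by 8 bytes of preamble and start-of-frame delimiter and followed by a 12-byte interframe gap, and that the switch has only just started sending it when the high-priority message is queued. On a link of data rate $R$, the blocking time is then bounded by:

$$
t_{\text{block}} \le \frac{8 \times (1522 + 8 + 12)\ \text{bit}}{R} = \frac{12336\ \text{bit}}{R}
$$

This gives about 123.4 µs at 100 Mbit/s and about 12.3 µs at 1 Gbit/s.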

Subsequent interfering messages

If a message must travel through multiple switches before reaching its destination, additional interference at each of these switches is possible. This section will analyze what happens when additional interfering messages are present at subsequent switches. The first case is when the additional interfering messages are of lower priority than the high priority message and the second case is when they are of equal or higher priority.

Lower Priority Messages

While it is interesting to examine the case where the initial lower priority message is shorter than the high priority message, it does not represent the worst-case possibility. In fact, the worst-case scenario is when the initial lower priority message is the maximum length permitted by the network. Hence, the following examples will only consider the case where the initial lower priority message (int0) is longer than the high priority message (HP0).

On a subsequent switch in the line (SW2), two messages arrive on port 1 (P1) destined for port 2 (P2): the initial lower priority interfering message (int0) followed by the high priority message (HP0). As in the previous example, a new lower priority interfering message (int1) is assumed to be queued just prior to the arrival (queuing) of int0, so the switch will send int1 as its next message and queue int0. Two cases need to be considered.

The first is that HP0 is queued prior to the switch completing its sending of int1. In this case, when the switch performs its evaluation of which message to send next, both HP0 and int0 are already queued and the switch will decide to send HP0, since it has a higher priority, instead of int0, thus HP0 will hop in front of int0.
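This first case can be reproduced with a small simulation of one egress port. It is a sketch under simplifying assumptions: the port is store-and-forward with non-preemptive strict priority, times are in arbitrary units, and the function name `egress_order` and the example timings are made up for illustration.

```python
def egress_order(frames):
    """Simulate one egress port with non-preemptive strict priority.

    frames: list of (name, queued_at, tx_time, priority); higher priority wins.
    Returns a list of (name, start_time) in transmission order.
    """
    pending = sorted(frames, key=lambda f: f[1])   # order by queue time
    now = 0
    sent = []
    while pending:
        ready = [f for f in pending if f[1] <= now]
        if not ready:
            now = pending[0][1]    # port idle: jump to the next arrival
            continue
        # Highest priority first; ties go to the frame queued earliest.
        chosen = max(ready, key=lambda f: (f[3], -f[1]))
        sent.append((chosen[0], now))
        now += chosen[2]           # the port is busy for the whole frame
        pending.remove(chosen)
    return sent


# int1 is queued just before int0; HP0 is queued while int1 is still being sent.
order = egress_order([
    ("int1", 0, 120, 0),
    ("int0", 1, 120, 0),
    ("HP0", 50, 10, 7),
])
```

With these timings, int1 is sent first, HP0 follows as soon as int1 completes, and int0 goes last even though it was queued before HP0.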

Higher priority interfering messages

In the previous example, all interfering messages were lower in priority than the high priority message. If the initial interfering message is, instead, of the same or higher priority than the initial high priority message, the order of messages egressing the switch will change. A message equal in priority to the initial high priority message just needs to be queued prior to the initial high priority message to cause interference.

For a message of higher priority than the initial high priority message, it just needs to be queued prior to the switch deciding which message to send next.

Deriving a general formula for this use case is quite difficult. Factors such as variation of interfering message lengths, priorities and order coupled with the store-and-forward behavior potentially introducing gaps contribute to the difficulty.

Cut-through Interference

Analyzing the behavior of interfering traffic in a cut-through situation is much more complicated. First and foremost, cut-through behavior is not defined by the IEEE and, as such, is up to a vendor’s discretion regarding implementation.

For example, if a switch begins receiving a message destined for a particular port and that port is already occupied sending another message, should the switch truncate the current message, wait for the completion of the current message and begin transmitting (depending upon priority) or should it fully complete storing the message before deciding on a course of action?

Additionally, if a series of messages is being received on port 1, destined for port 2, and another message of equal priority is queued, is there an opportunity for this queued message to be transmitted, or does the switch give preference to the cut-through messages? Given all of these unknowns, and because the IIC document on traffic types does not recommend the use of cut-through for strict priority traffic, this article will not explore interference behaviors of cut-through switching for strict priority traffic. Cut-through switching will only be considered for scheduled traffic.

Scheduling interference

Finally, in a converged TSN network, schedules may exist that provide exclusive access or that may restrict access to a network. When a switch port allocates exclusive time for one of its queues, it will do so in a cyclic fashion. During a portion of that cycle, the queue is opened and only messages in that queue can egress the port. Therefore, messages in other queues will need to wait for that period of exclusive access to expire and for their queues to open. Additionally, as a message traverses a line of switches, it may encounter multiple scheduled windows.
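The wait imposed by an exclusive window can be estimated with a short sketch. It assumes, for illustration only, that the exclusive window occupies the start of every cycle and that a non-scheduled frame may only begin if it can finish before the next window opens (a guard-band style rule). The function name `earliest_start_ns` and the numbers in the example are hypothetical.

```python
def earliest_start_ns(ready_ns, tx_ns, cycle_ns, window_ns):
    """Earliest start time for a non-scheduled frame.

    The exclusive window covers [0, window_ns) of every cycle. The frame is
    assumed to need to finish before the next window opens.
    """
    if tx_ns > cycle_ns - window_ns:
        raise ValueError("frame never fits between exclusive windows")
    offset = ready_ns % cycle_ns
    cycle_start = ready_ns - offset
    if offset < window_ns:
        # Ready during the exclusive window: wait for it to close.
        return cycle_start + window_ns
    if offset + tx_ns > cycle_ns:
        # Would overrun into the next window: wait until that window closes.
        return cycle_start + cycle_ns + window_ns
    return ready_ns


# 1 ms cycle, 200 us exclusive window, frame needing 100 us on the wire.
start = earliest_start_ns(950_000, 100_000, 1_000_000, 200_000)
```

In this example a frame that becomes ready 950 µs into the cycle cannot finish in time, so it waits until 1.2 ms, a delay of 250 µs at a single switch. The same calculation would apply again at each scheduled port along the path.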


Converged traffic

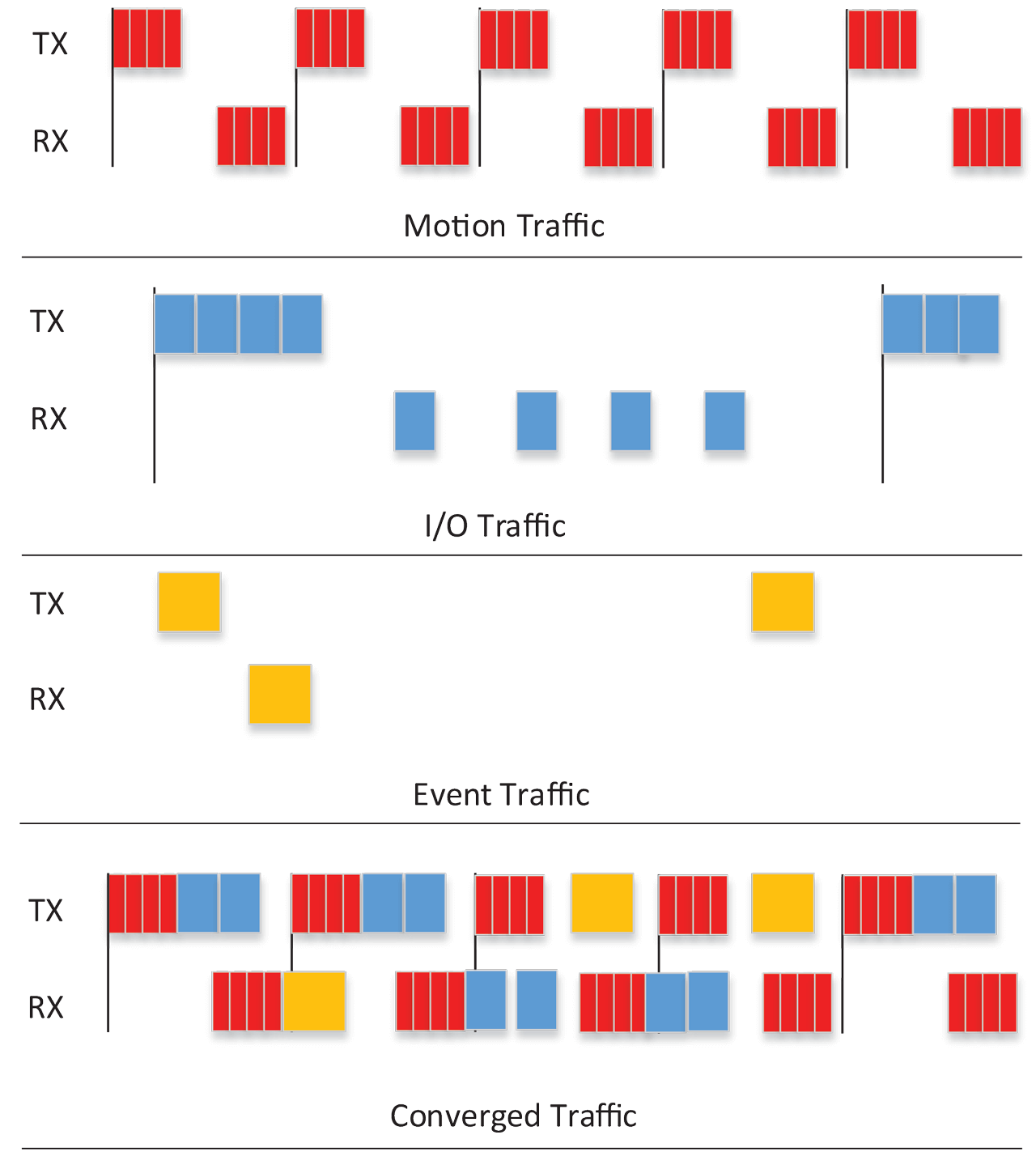

The previous sections described how messages on a switched Ethernet network could be delayed due to interference. Even with strict priority switching, interference can occur due to lower priority traffic. This section will look at three traffic types prevalent in Industrial Control Systems and demonstrate the effects of converging these traffic types on a single Ethernet network using TSN features. Coexistence of all three traffic types (motion, I/O and event) only occurs on the wires between the controllers and the switch. Line1 and Line2 only contain motion and I/O traffic for their respective controllers.

Motion traffic

While strict priority could be used for motion traffic, optimum performance is achieved when no interrupting traffic is present during motion communication messages. Hence, this article will assume that a scheduled traffic shaper with exclusive access will be used for motion traffic in a converged network.

This shaped window must be synchronized with the motion applications within the various devices so that the messages from the motion applications arrive coincident with the shaped schedule. Thus, the only variations in network latencies will be due to factors such as variances in clock synchronization and switch processing times.

I/O traffic

This traffic type requires bounded latency, and a limited amount of interference can be tolerated. Parameter requirements such as period, latency and bandwidth suggest the use of strict priority. To verify that latency guarantees are met, a network analysis (e.g., network calculus) is necessary. The analysis needs to cover the effect of different data streams of the same traffic type as well as the effect of the isochronous (motion) traffic.

Event traffic

This traffic type contains two categories (alarm events and control events), which have similar characteristics but differ in latency requirements. While both categories send data in a non-periodic manner, an upper bound for the worst-case bandwidth usage needs to be defined in the application. Additionally, two priority levels should be considered, one for control events and a second for alarm events. In a converged network, control events may be given a higher, lower or equal priority relative to the I/O traffic.

Putting it all together

One example application assumes event traffic is a lower priority than I/O traffic and that motion traffic is scheduled. As a result, motion traffic remains unaffected by convergence while some I/O and event messages are delayed. However, TSN provides mechanisms for making sure desired bandwidth and latency requirements are met.

Summary and conclusion

There are many different types of Ethernet traffic present in industrial control systems. Currently, various techniques are used to guarantee delivery of these various traffic types for proper system operation. This article summarized several Time Sensitive Networking (TSN) features that could be used to converge traffic types on a single network.

A small, representative system with three traffic types (motion, I/O and event) was presented to analyze traffic type convergence. It showed how different types of interfering traffic can affect the latency of an Ethernet message, even in the presence of strict priority queuing. An overview of frame preemption was presented, which could be used to reduce latencies for certain traffic types. Cut-through switching was described along with its caveats.

The interference scenarios provide general use cases. Fringe use cases exist and are left as an exercise for the reader. One key takeaway is that, with the exception of scheduled traffic using exclusive gating, TSN cannot prevent interference of one message by another.

However, TSN provides the capability, along with network calculus, to determine if messages can be delivered within a certain latency, which is a huge improvement over current Ethernet technology.