Technology | July 18, 2025

Current status of AI in operational technology


Introducing Artificial Intelligence (AI) into Operational Technology (OT) has many benefits. Advanced AI-driven analytics enable higher performance, new data-driven capabilities, and lower energy consumption. They also offer a way around the looming shortage of experts by making OT more accessible to staff without deep technical expertise.

However, currently available AI solutions present organizations with two main problems: unpredictable outputs and fluctuating performance.

Challenges and risks of applying AI in OT

Probabilistic AI vs. Deterministic OT: Industrial AI suffers from the same shortcomings as publicly available models. Traditional OT control systems (such as PLCs and SCADA) are designed to be deterministic, providing predictable, stable behavior that maximizes uptime. AI systems, especially those based on machine learning (such as AI agents), are inherently probabilistic. In simple terms, today's AI algorithms are not designed for the deterministic logic of OT systems.
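To make the contrast concrete, here is a toy sketch. It assumes a hypothetical model that estimates a trip probability and then samples its decision from it, as generative AI agents typically do. A PLC-style interlock returns the same answer for the same input every time, while the sampled model can disagree with itself. Both function names and the logistic score are illustrative assumptions, not a real controller or model.

```python
import math
import random


def plc_high_temp_trip(temp_c: float, limit_c: float = 80.0) -> bool:
    """Deterministic interlock: the same input always gives the same decision."""
    return temp_c > limit_c


def ml_high_temp_trip(temp_c: float, rng: random.Random, limit_c: float = 80.0) -> bool:
    """Hypothetical probabilistic model: it estimates a trip probability and then
    samples a decision from it, so repeated calls on one input can disagree."""
    # Toy logistic score centred on the same limit (an illustrative assumption).
    p_trip = 1.0 / (1.0 + math.exp(-(temp_c - limit_c)))
    return rng.random() < p_trip
```

At 80.5 °C, `plc_high_temp_trip` returns `True` on every call, whereas repeated calls to `ml_high_temp_trip(80.5, random.Random(0))` return a mix of `True` and `False`, because the estimated trip probability there is only about 0.62.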

The Data Dilemma: The main problem is that industrial data is often noisy, unstructured, or incomplete. Sensors can drop readings, industrial protocols may deliver terse or binary data, and “ground truth” labels for events such as component failures or quality defects are often missing or unreliable. A model fed with such data cannot be expected to produce accurate output. The alternative is to train it on high-quality OT data, but obtaining that data is itself a challenge and can be a major limitation for companies still struggling with the basics. Because many OT environments are brownfield, AI must also coexist with legacy systems and protocols. There are no shortcuts: factories must first address their digitalization challenges before they can properly introduce AI.
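Dropped sensor readings are one of the simplest data-quality problems to illustrate. The sketch below is one possible cleaning step, assuming readings keyed by an integer timestamp on a nominal fixed interval. It re-grids the series, linearly interpolates short gaps, and deliberately leaves long gaps empty so the model is not trained on invented values. The function name and the `max_gap` policy are hypothetical choices, not a standard.

```python
from __future__ import annotations

from typing import Optional


def regrid_with_short_gap_fill(samples: dict[int, float], start: int, end: int,
                               step: int, max_gap: int) -> list[Optional[float]]:
    """Place readings on a fixed time grid from start to end (inclusive).

    Interior gaps of up to `max_gap` missing samples are linearly interpolated;
    longer gaps (and gaps at either edge) stay None so they remain visible."""
    values: list[Optional[float]] = [samples.get(t) for t in range(start, end + 1, step)]
    i = 0
    while i < len(values):
        if values[i] is not None:
            i += 1
            continue
        j = i
        while j < len(values) and values[j] is None:
            j += 1  # j stops on the first reading after the gap, or at the end
        gap_len = j - i
        if i > 0 and j < len(values) and gap_len <= max_gap:
            left, right = values[i - 1], values[j]
            for k in range(i, j):
                frac = (k - i + 1) / (gap_len + 1)
                values[k] = left + frac * (right - left)
        i = j
    return values
```

Keeping long gaps as `None` is a design choice: it lets later stages flag or exclude those periods instead of treating a guessed value as ground truth.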

The Human Factor and the Demand for “Why”: Another problem is performance fluctuation, which stems from the particular role AI plays in industrial processes. The intrinsic value (and a weakness) of industrial AI is that it is designed to mimic an employee. Much like a human employee, the AI needs time to be trained with the knowledge required to avoid errors and biases. When an AI system flags an anomaly or recommends adjusting a process parameter, engineers will want to know why. Unlike a traditional algorithm built on clear logical rules, a machine learning model’s reasoning is often opaque and can even be logically flawed.

Overall, integrating AI into OT systems poses many challenges. These range from technical issues (unpredictable behavior, poor data quality, limited computational power, integration with legacy systems) to human and organizational factors (trust, skills gaps, regulatory compliance). Fortunately, companies are already gaining relevant experience and building a set of best practices to address these issues. In the next section, we look at some existing strategies that can mitigate the risks and streamline the adoption of AI in industrial environments.

Strategies to mitigate AI risks in industrial environments

When introducing AI into OT, it is always best to start small and safe. Rather than expecting a new AI model to help with critical processes right away, deploy it first in non-critical areas or in an advisory capacity. For example, AI might first be used to analyze historical data and provide insights (e.g. suggesting maintenance windows or flagging inefficiencies) without the ability to directly affect operations.

This allows experienced operators who continuously oversee the outputs to provide feedback and optimize the model. Only when the AI has proven to be sufficiently reliable in this read-only role should it be gradually introduced into closed-loop controls.
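The read-only phase can be sketched as a log in which AI recommendations are only recorded and operators mark each one as accepted or rejected. Assuming acceptance rate is a reasonable proxy for reliability, a simple gate then decides when the model may be considered for closed-loop use. The class, its methods, and the default thresholds (50 reviews, 95% acceptance) are hypothetical illustrations, not recommended values.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AdvisoryLog:
    """Stores AI recommendations and operator verdicts; nothing is sent to the process."""
    records: list[dict] = field(default_factory=list)

    def recommend(self, item: str, suggestion: str) -> int:
        # Advisory only: the suggestion is kept for human review, never written to a controller.
        self.records.append({"item": item, "suggestion": suggestion, "accepted": None})
        return len(self.records) - 1

    def review(self, index: int, accepted: bool) -> None:
        self.records[index]["accepted"] = accepted

    def acceptance_rate(self) -> float:
        reviewed = [r["accepted"] for r in self.records if r["accepted"] is not None]
        return sum(reviewed) / len(reviewed) if reviewed else 0.0

    def ready_for_closed_loop(self, min_reviews: int = 50, min_rate: float = 0.95) -> bool:
        # Hypothetical promotion rule: enough reviewed cases AND a high acceptance rate.
        n_reviewed = sum(r["accepted"] is not None for r in self.records)
        return n_reviewed >= min_reviews and self.acceptance_rate() >= min_rate
```

In practice the promotion criteria would be set by the plant's own safety and quality processes; the point of the sketch is that the model earns trust from recorded operator feedback before it is allowed to act.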

Pilot projects in a testbed or sandbox environment, utilizing either digital twins or other simulation environments, are a must. By the time the AI is controlling something in production, it should have gone through multiple stages:

1. offline analysis

2. decision support

3. supervised automation

4. autonomous control for select and well-understood tasks
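The four stages above can be encoded as a gate that every AI-proposed setpoint write must pass. This is a minimal sketch under two assumptions that go beyond the list: a deterministic safety envelope (`low`/`high`) is enforced at every stage, and "supervised automation" means each write needs explicit operator approval. The names `Stage` and `may_write_setpoint` are hypothetical.

```python
from enum import IntEnum


class Stage(IntEnum):
    OFFLINE_ANALYSIS = 1
    DECISION_SUPPORT = 2
    SUPERVISED_AUTOMATION = 3
    AUTONOMOUS_CONTROL = 4


def may_write_setpoint(stage: Stage, value: float, low: float, high: float,
                       operator_approved: bool = False) -> bool:
    """Decide whether an AI-proposed setpoint may be written to the process."""
    if not (low <= value <= high):
        return False  # assumed deterministic safety envelope, checked at every stage
    if stage <= Stage.DECISION_SUPPORT:
        return False  # stages 1-2: read-only, suggestions go to humans only
    if stage == Stage.SUPERVISED_AUTOMATION:
        return operator_approved  # stage 3: a human confirms each write
    return True  # stage 4: autonomous, intended only for select, well-understood tasks
```

Keeping the envelope check outside the model means that even a fully autonomous AI can never push a value the conventional, deterministic logic would reject.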

Effective use of AI in OT also calls for eXplainable AI (XAI) techniques, in which each AI decision is accompanied by a human-readable rationale. For example, an AI-based “industrial agent” that identifies a network fault should be able to explain its conclusion by pointing to specific anomalous metrics or error patterns, much like an experienced human technician would. By providing context (e.g. “Switch 3’s traffic is outside normal VLAN parameters, which likely caused the device to disconnect”), the AI makes its actions transparent, allowing operators to verify them. Operator feedback on these explanations then flows back into the model, a continuous improvement loop analogous to preventive maintenance, except that it applies to algorithms rather than machines.
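As a very simple illustration of “pointing to specific anomalous metrics”, the sketch below compares each current metric with its historical baseline using a z-score and turns the largest deviations into a sentence. This is a swapped-in statistical technique chosen for clarity, not a full XAI method such as feature-attribution analysis; the function name, metric names, and the 3-sigma threshold are assumptions.

```python
from __future__ import annotations

import statistics


def explain_anomaly(baseline: dict[str, list[float]], current: dict[str, float],
                    device: str, threshold: float = 3.0, top_n: int = 3) -> str:
    """Rank metrics by deviation from their baseline (z-score) and phrase the
    largest ones as a human-readable rationale."""
    deviations = []
    for metric, history in baseline.items():
        if len(history) < 2 or metric not in current:
            continue  # stdev needs at least two points
        mean = statistics.fmean(history)
        stdev = statistics.stdev(history)
        if stdev == 0:
            continue
        z = (current[metric] - mean) / stdev
        if abs(z) >= threshold:
            deviations.append((abs(z), metric, current[metric], mean, z))
    if not deviations:
        return f"{device}: no metric deviates by {threshold} or more standard deviations from baseline."
    deviations.sort(key=lambda d: d[0], reverse=True)
    parts = [f"{m} is {v:g} vs. a baseline mean of {mu:.3g} ({z:+.1f} sigma)"
             for _, m, v, mu, z in deviations[:top_n]]
    return f"{device} flagged because " + "; ".join(parts) + "."
```

The output names the metric, its current value, and how unusual it is, which is exactly the kind of evidence an operator can check against the device before acting.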

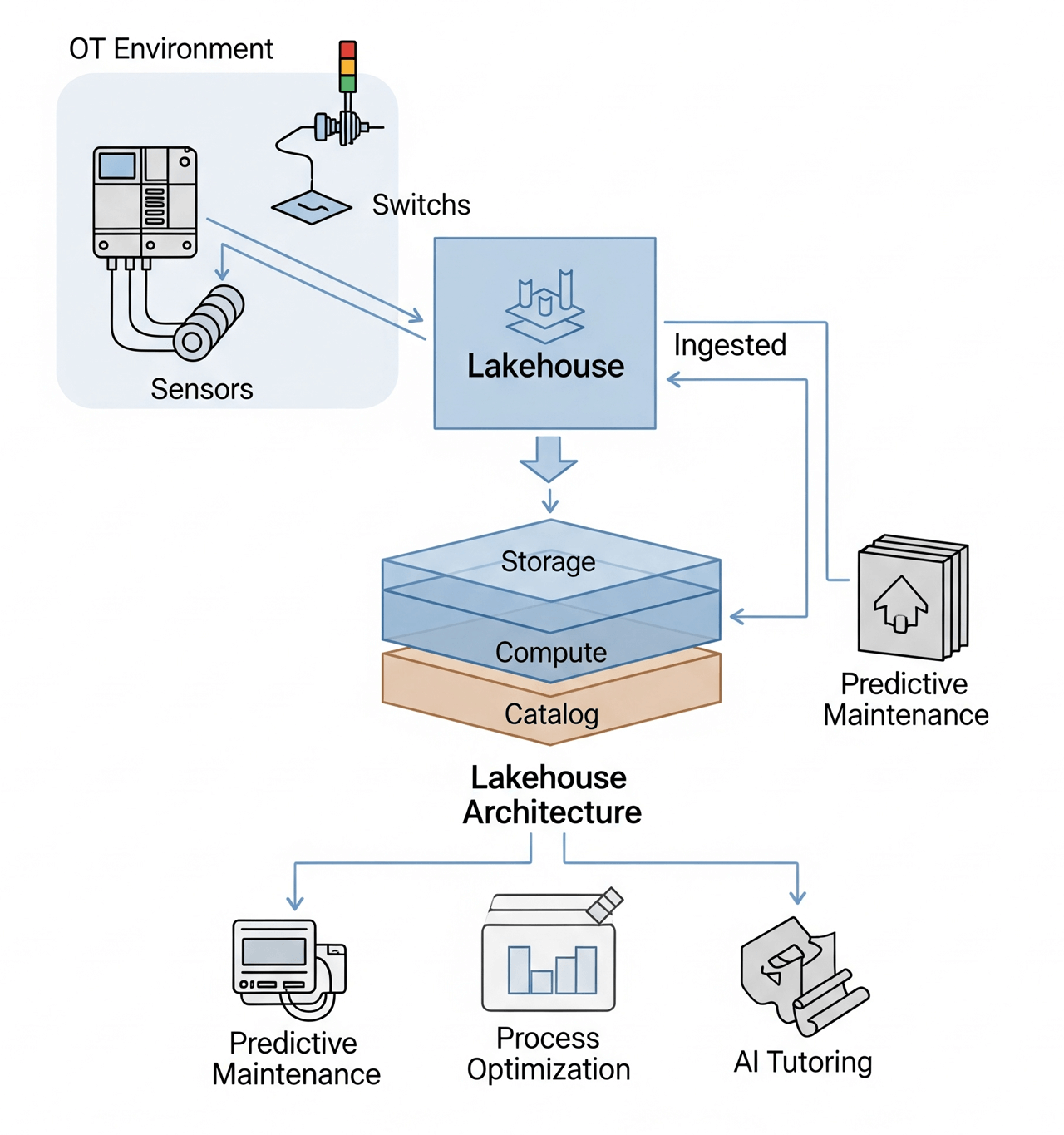

Data architecture at the core of it – the lakehouse

Another key area of discussion is a unified architecture for data ingestion and storage. The Lakehouse data architecture is currently the most suitable model for implementing industrial AI effectively.

The Lakehouse combines three important building blocks:

- a storage layer for managing data persistence,

- a compute layer for handling queries and processing tasks,

- a catalog that controls metadata and schema definitions.

The Lakehouse model merges capabilities that usually require a separate data lake and data warehouse into a single platform, enabling organizations to run both analytical and AI-driven workloads against a single source of truth.

Lakehouse architecture stores large volumes of data in cloud object storage such as Amazon S3, Azure Data Lake Storage, or Google Cloud Storage. At around $20-23 per terabyte per month, this is much cheaper than traditional storage systems. In complex network monitoring scenarios, such as capturing detailed telemetry from thousands of switches, routers, and optical devices, terabytes of data can accumulate each year. The Lakehouse makes it possible to retain several years of performance metrics efficiently and cheaply, so organizations can run more accurate analyses and track long-term trends.
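The storage figures can be checked with back-of-the-envelope arithmetic. The sketch below estimates yearly telemetry volume and the resulting monthly bill at the article's upper price of $23 per TB per month. Device count, metrics per device, sampling interval, and stored bytes per data point are all hypothetical inputs; real compression ratios and storage tiers vary widely.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def telemetry_tb_per_year(devices: int, metrics_per_device: int,
                          interval_s: float, bytes_per_point: float) -> float:
    """Estimated stored volume per year in decimal terabytes (1 TB = 10**12 bytes)."""
    points = devices * metrics_per_device * SECONDS_PER_YEAR / interval_s
    return points * bytes_per_point / 1e12


def monthly_storage_cost(tb_stored: float, usd_per_tb_month: float = 23.0) -> float:
    """Monthly object-storage cost for the data currently retained."""
    return tb_stored * usd_per_tb_month
```

For example, 5,000 devices reporting 50 metrics every 10 seconds at an assumed 8 bytes per stored point produce about 6.3 TB per year. Retaining three years (about 18.9 TB) would then cost roughly $435 per month at $23/TB-month.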

Schema evolution is another advantage. Open table formats such as Delta Lake, Apache Iceberg, and Apache Hudi allow a table's schema to adapt without interruption when new metrics and data points arrive. Users can therefore integrate them, along with other contextual inputs for AI, without downtime or data migration.
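The idea behind additive schema evolution can be shown with a toy in-memory table: when a row arrives with a new column, the schema is extended and older rows simply read back `None` for it, so nothing already stored is rewritten. This is a conceptual sketch only. Real table formats record schema changes in table metadata and handle renames, type promotion, and concurrency, none of which is modeled here. `EvolvingTable` is a hypothetical name.

```python
from __future__ import annotations


class EvolvingTable:
    """Toy append-only table illustrating additive (nullable-column) schema evolution."""

    def __init__(self) -> None:
        self.columns: list[str] = []
        self._rows: list[dict] = []

    def append(self, row: dict) -> None:
        for name in row:
            if name not in self.columns:
                self.columns.append(name)  # schema change only; existing rows untouched
        self._rows.append(dict(row))

    def scan(self) -> list[tuple]:
        # Project every stored row onto the current schema; missing columns read as None.
        return [tuple(r.get(c) for c in self.columns) for r in self._rows]
```

A row written before a new metric such as `temp_c` existed is still readable afterwards, just with an empty value in that column.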

Lakehouse architecture is also based on open standards, which greatly reduces vendor lock-in. By storing data in widely supported formats such as Parquet or ORC and using standard interfaces such as the S3 API, organizations are free to choose among compute engines (for example Spark, Trino, or DuckDB) according to their requirements. Perhaps the biggest advantage for teams building AI-driven applications, however, is the unified data access the Lakehouse offers. Engineers and operators can analyze multiple data types using standard SQL queries or Python DataFrames, and built-in features such as “time travel” make it straightforward to revisit historical baselines or investigate past anomalies.
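“Time travel” works because every commit produces a new, immutable snapshot of the table, and a query can select the snapshot that was current at a given time. The toy class below illustrates that lookup with a sorted list of commit timestamps. It is a hypothetical sketch: real formats keep snapshots as metadata that references shared data files rather than full copies. In Delta Lake and Apache Iceberg, the same capability is exposed through SQL clauses such as `VERSION AS OF` and `TIMESTAMP AS OF`.

```python
from __future__ import annotations

import bisect


class SnapshotTable:
    """Toy table where each commit adds an immutable snapshot keyed by commit time."""

    def __init__(self) -> None:
        self._times: list[int] = []
        self._snapshots: list[tuple] = []

    def commit(self, ts: int, rows: list) -> None:
        if self._times and ts <= self._times[-1]:
            raise ValueError("commit timestamps must increase")
        previous = self._snapshots[-1] if self._snapshots else ()
        self._times.append(ts)
        self._snapshots.append(previous + tuple(rows))  # new snapshot; old one kept

    def as_of(self, ts: int) -> tuple:
        """Return the table contents as they were at time ts (empty before the first commit)."""
        i = bisect.bisect_right(self._times, ts)
        return self._snapshots[i - 1] if i else ()
```

Querying `as_of` with a timestamp from before an incident returns the baseline the model saw at that time, even after newer data has been appended.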


Use cases: AI in industrial automation

Despite the challenges, the first use cases of AI in automation are already emerging. The most mature and widely adopted AI use cases in OT are those that augment human decision-making.

Predictive maintenance

One of the most common AI applications in OT is predictive maintenance, in which AI predicts equipment failures before they occur. From conveyor belt motors to valves and switches on the production line, there are many areas where AI systems can monitor the performance and degradation of components, spot excessive wear, and highlight impending failures, alerting operators to potential issues weeks or even months in advance.
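A common starting point for spotting gradual degradation is to fit a trend to a health metric and extrapolate when it will cross an alarm level. The sketch below uses an ordinary least-squares line, assuming degradation is roughly linear, and returns the estimated days remaining, or `None` if no future crossing is predicted. The function name and the alarm threshold are assumptions; production systems often use richer models.

```python
from __future__ import annotations

from typing import Optional


def days_until_threshold(days: list[float], values: list[float],
                         threshold: float) -> Optional[float]:
    """Fit a least-squares line to (day, value) readings and estimate how many days
    after the last reading the trend reaches `threshold`."""
    n = len(days)
    if n < 2:
        return None
    mean_x = sum(days) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    if sxx == 0:
        return None
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, values))
    slope = sxy / sxx
    if slope == 0:
        return None  # flat trend never reaches the threshold
    intercept = mean_y - slope * mean_x
    crossing_day = (threshold - intercept) / slope
    remaining = crossing_day - days[-1]
    return remaining if remaining > 0 else None
```

For instance, readings falling linearly from -2.1 dBm by 0.01 dBm per day, checked against an assumed alarm level of -3.0 dBm, give about 60 days remaining after a 30-day observation window.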

The example below shows an AI agent monitoring network hardware (such as the optical transceivers in industrial switches) and noticing a gradual decay in signal strength. After the potential issue was identified, the conversation with the AI went as follows:

AI Agent: “After reviewing the network telemetry, I advise replacing the optical receivers in Switch-Core-3. I’ve noticed a steady decline in signal strength by approximately 0.3 dBm over the last month, without any associated link-down events. This type of degradation typically points to component aging rather than physical connection problems.”

Network Admin: “That’s going to cost around $15,000. How confident are you in this recommendation?”

AI Agent: “My confidence level is high: approximately 90%. The degradation follows a consistent, linear trend, unlike fiber contamination, which usually appears as abrupt signal fluctuations during reconnection events. Historical records from 18 comparable switches show that a similar pattern predicted optical receiver failures within 60 days with an accuracy of 87%. Additionally, maintenance logs report no fiber cleaning or reconnections during this period.”

Network Admin: “Can you display the trend analysis and provide similar cases for reference?”

AI Agent: “Certainly. Here’s the detailed analysis:

- Signal Strength Trend: Over the past 30 days, signal strength has steadily dropped from -2.1 dBm to -2.4 dBm.

- Link-Down Events: No link-down occurrences recorded in maintenance logs during this time.

- Historical Comparison: Of 18 previous similar situations, 16 resulted in optical receiver failures within a 60-day timeframe.

- Potential Impact: Estimated downtime costs approximately $50,000 per hour if failure occurs.

- Optimal Maintenance Window: Next scheduled maintenance is planned for next weekend.

- Risk Assessment: There is an 87% likelihood of receiver failure within the next 45 days, prior to the subsequent scheduled maintenance.”
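The admin's cost question reduces to simple expected-value arithmetic. Using the figures from the exchange (a $15,000 replacement, $50,000 per hour of downtime, 87% failure likelihood), the sketch below compares the expected loss from waiting with the cost of replacing now, and computes the break-even outage length. The outage duration is not stated in the conversation, so it is a hypothetical input. The model also ignores second-order costs such as labor during the planned window.

```python
def expected_failure_loss(p_fail: float, downtime_h: float, cost_per_h: float) -> float:
    """Expected cost of waiting: probability of failure times cost of the resulting outage."""
    return p_fail * downtime_h * cost_per_h


def break_even_downtime_h(replacement_cost: float, p_fail: float, cost_per_h: float) -> float:
    """Outage length (hours) at which waiting and replacing now cost the same in expectation."""
    return replacement_cost / (p_fail * cost_per_h)


def replace_now(replacement_cost: float, p_fail: float, downtime_h: float,
                cost_per_h: float) -> bool:
    """True if the expected loss from an unplanned failure exceeds the replacement cost."""
    return expected_failure_loss(p_fail, downtime_h, cost_per_h) > replacement_cost
```

With these numbers the break-even outage is about 0.34 hours (roughly 21 minutes). Any unplanned failure expected to take the network down for longer than that makes the $15,000 replacement the cheaper option in expectation.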

Process optimization

AI is also increasingly used to fine-tune industrial processes for optimal performance. For example, AI might discover that a slight adjustment to oven temperature and conveyor speed in a furnace process yields higher output with lower energy per unit under certain humidity conditions. In another case, an automotive plant used AI to analyze its assembly line and found that rearranging some tools and parts (e.g. moving a component bin closer to a workstation) could save each worker a few seconds, adding up to significant productivity gains over time.
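A very simple version of the furnace analysis is to group historical runs by setting, restrict them to a humidity band, and pick the setting with the lowest average energy per unit that still met an output target. The sketch below does exactly that. The record field names (`temp_c`, `speed_m_min`, `humidity`, `output`, `energy_kwh`) are hypothetical. A real optimization would also control for confounding factors or use a process model rather than raw averages.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Optional


def best_setting(runs: list[dict], humidity_range: tuple[float, float],
                 min_output: float) -> Optional[tuple]:
    """Return the (temperature, conveyor speed) pair with the lowest mean energy per
    unit among historical runs in the humidity range that met `min_output`."""
    lo, hi = humidity_range
    totals = defaultdict(lambda: [0.0, 0])  # setting -> [sum of energy per unit, run count]
    for r in runs:
        if lo <= r["humidity"] <= hi and r["output"] >= min_output:
            key = (r["temp_c"], r["speed_m_min"])
            totals[key][0] += r["energy_kwh"] / r["output"]
            totals[key][1] += 1
    if not totals:
        return None
    return min(totals, key=lambda k: totals[k][0] / totals[k][1])
```

Because the result is only a recommendation drawn from past data, it fits naturally into the advisory, operator-reviewed mode described earlier.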

AI tutoring

Another emerging use case is AI tutoring. AI can capture the knowledge and experience of seasoned engineers and preserve it so that newcomers can learn from them even when those engineers are not present. With many industries facing generational turnover as experienced staff retire, AI tools can help mitigate the resulting loss of expertise.

For example, an “AI agent” could observe how an expert troubleshoots a particular machine over time, through either recorded data or natural language explanations, and learn to replicate that diagnostic process. Later, a less experienced technician could ask the AI, “Why is Machine A producing off-spec product?”, and the AI might respond with reasoning similar to the expert’s. The outcome is a workforce that onboards faster (since newcomers have an AI tutor to consult), while experienced professionals can devote more attention to high-value activities.
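One building block of such a tutor is retrieving the recorded expert case that best matches a technician's question. The sketch below uses plain bag-of-words cosine similarity over captured (symptom, diagnosis) notes. This is a deliberately simple, swapped-in technique; a real AI tutor would likely pair retrieval with a language model and a curated knowledge base. The function names and the note format are hypothetical.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def _tokens(text: str) -> Counter:
    """Lower-cased word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def closest_expert_case(question: str, cases: list[tuple[str, str]]) -> tuple[str, str]:
    """Return the recorded (symptom, expert diagnosis) pair whose symptom text is most
    similar to the question."""
    q = _tokens(question)
    return max(cases, key=lambda case: _cosine(q, _tokens(case[0])))
```

Returning the expert's original diagnosis, rather than a generated answer, also gives the newcomer a traceable reason for the suggestion.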

The above examples demonstrate that, when thoughtfully implemented, AI can significantly enhance industrial operations, from reducing downtime and waste to improving safety and worker efficiency. Companies at the forefront of Industry 4.0 are treating AI as a key tool in the automation toolbox, complementing classical control systems and human expertise.

As eXplainable AI (XAI) techniques mature and data architectures become more unified, the journey from supervised AI to trusted autonomous control will accelerate. For industrial organizations, the essential first step remains the same: building the foundational data and networking infrastructure today to support the intelligent operations of tomorrow.